Introduction

Chances are you have heard of ONNX but are not sure what it does or how to use it. Don’t worry, you are in the right place. In this beginner-friendly article, you will learn what ONNX is and how to use it. Let’s dive in.

Assume that you built a face detection model using the TensorFlow deep learning framework. Unfortunately, you have to deploy this model in an environment that uses PyTorch. How can you handle this scenario? There are two ways to approach it:

- Convert the existing model to a standard format that can work in any environment.

- Convert the model from one framework (TensorFlow) to the desired framework (PyTorch).

This is exactly what ONNX does. With the ONNX platform, you can convert the TensorFlow model to ONNX (an open standard format for interoperability) and then use it for inference/prediction. Alternatively, you can further convert the ONNX model to PyTorch and use the PyTorch model for inference/prediction.

Thanks to coordinated efforts by partners such as Microsoft, Facebook, and AWS, among others, we can now work without worrying about which framework was used to build a machine learning model.

ONNX

ONNX stands for Open Neural Network Exchange. It is mainly used for three tasks:

- Converting a model from a supported framework to the ONNX format

- Converting an ONNX model to another desired framework

- Running faster inference with the ONNX model on supported runtimes

Although the name says “neural network”, ONNX can represent both deep learning models and traditional machine learning models, so don’t let the name confuse you.

As you can see below, ONNX supports most popular frameworks. If you want to convert PyTorch, MXNet, MATLAB, XGBoost, or CatBoost models to ONNX, refer to the official tutorials.

ONNX Runtime

The diagram below lists the runtimes that support ONNX. These runtime engines are built for high-performance inference and come with built-in optimizations. ONNX Runtime is one of them, and it is the engine we will use for inference in this article.

Now that you have a general idea of ONNX and ONNX Runtime, let’s move on to the main topic: using Scikit-learn and TensorFlow models as examples, we will convert each one to ONNX format and run inference on it with ONNX Runtime.

In future articles, we will explore converting ONNX to other frameworks, optimizations, transformer models, and more.

Scikit-learn to ONNX

Installation

Code

The workflow is straightforward. We first build the Scikit-learn model (Step 1), then convert it to ONNX format (Step 2), and finally make predictions with ONNX Runtime (Step 3). In our run, inference with the ONNX model was 6–7 times faster than with the original Scikit-learn model, and the speedup should be even more noticeable on bigger datasets.

For more details on skl2onnx, refer to its official documentation.

TensorFlow to ONNX

Installation

Code

The TensorFlow workflow follows the same steps. Here too, inference with the ONNX model was 6–7 times faster than with the original TensorFlow model in our run. As mentioned earlier, the gains should be even more noticeable on bigger datasets.

For more details on tf2onnx, refer to its official documentation.

Other tools

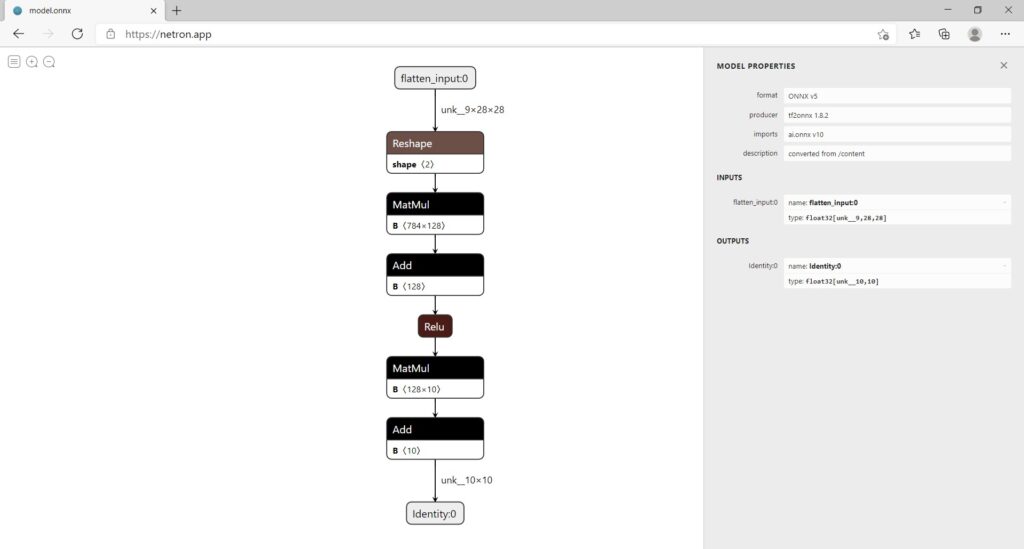

The screenshot below shows the TensorFlow deep learning model we created earlier, visualized with Netron.

Conclusion

In this article, you learned what ONNX is and how it benefits developers. We then worked through examples of ONNX conversion and saw that, in our runs, inference with ONNX Runtime was much faster than with the original frameworks. Finally, we visualized the machine learning model with the Netron visualizer.