Introduction

K-fold cross-validation and GridSearchCV are important steps in most machine learning pipelines. K-fold cross-validation is used to evaluate the performance of a model, and GridSearchCV is used to find the best hyperparameters for it.

In our previous blog post here, we talked about the building blocks of creating the Pipeline such as Pipeline, make_pipeline, FeatureUnion, make_union, ColumnTransformer, etc. with examples. But to keep the article short, we did not touch on how to use K-Fold Cross-validation and GridSearchCV with Scikit-learn Pipelines.

So, the goal of this article is to cover K-fold cross-validation and GridSearchCV with a Scikit-learn Pipeline. We will give a brief introduction to both methods and then go through an example of each with a Scikit-learn Pipeline.

Setup

For the demonstration, we will be using the Loan Prediction dataset from Analytics Vidhya. In the code below, we create the Scikit-learn Pipeline without using K-fold cross-validation or GridSearchCV.

If you have read our previous blog post, the code below is a no-brainer. We have built a RandomForestClassifier using Pipeline and make_column_transformer (shorthand for ColumnTransformer).

You can find the full code accompanying this article here on GitHub.

import numpy as np

import pandas as pd

from sklearn import set_config

set_config(display="diagram")

from sklearn.pipeline import make_pipeline, make_union

from sklearn.pipeline import Pipeline, FeatureUnion

from sklearn.impute import SimpleImputer

from sklearn.compose import ColumnTransformer, make_column_transformer, make_column_selector

from sklearn.preprocessing import OneHotEncoder, StandardScaler

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.preprocessing import FunctionTransformer

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.linear_model import RidgeClassifier

df = pd.read_csv('train.csv')

X = df.drop(['Loan_Status'], axis=1)

y = df['Loan_Status']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0, stratify=y)

numeric_cols = ['ApplicantIncome', 'CoapplicantIncome', 'LoanAmount', 'Loan_Amount_Term', 'Credit_History']

categorical_cols = ['Gender', 'Married', 'Dependents', 'Education', 'Self_Employed', 'Property_Area']

numeric_transformer = make_pipeline(

SimpleImputer(strategy='mean'),

StandardScaler()

)

categorical_transformer = make_pipeline(

SimpleImputer(strategy='most_frequent'),

OneHotEncoder(handle_unknown='ignore')

)

preprocessor = make_column_transformer(

(numeric_transformer, numeric_cols),

(categorical_transformer, categorical_cols)

)

pipe = Pipeline(steps=[

('preprocessor', preprocessor),

('clf', RandomForestClassifier(random_state=42))

])

pipe.fit(X_train, y_train);

y_pred = pipe.predict(X_test)

print("Accuracy:", accuracy_score(y_test, y_pred))

Accuracy: 0.7967479674796748

In the next sections, let’s extend this code by implementing K-fold cross-validation and GridSearchCV with Sklearn Pipelines.

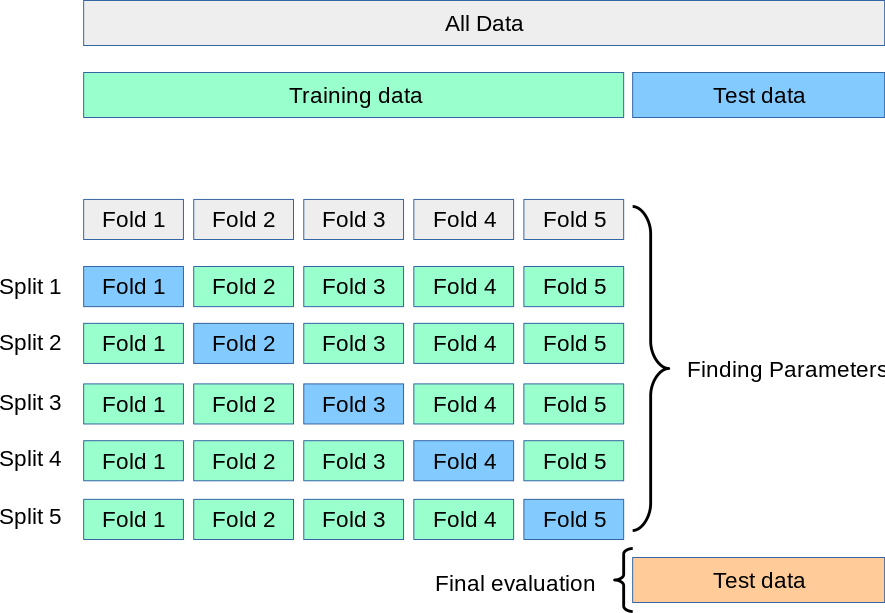

K-fold Cross-Validation

Cross-validation is a technique used to evaluate the performance of a model. In K-fold cross-validation, the data is split into k folds, and each fold is used for testing exactly once. For example, with 5-fold cross-validation, fold 1 is used for testing and folds 2–5 for training in the first iteration. In the second iteration, fold 2 is used for testing and the remaining folds for training, and so on until every fold has served as the test set once. The final score is usually reported as the mean of the k per-fold scores.
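The fold rotation described above can be sketched on a toy array. This is a minimal illustration, assuming ten made-up samples (the name X_toy is ours, not part of the loan dataset), that prints which indices are used for training and testing in each iteration.

```python
import numpy as np
from sklearn.model_selection import KFold

# Ten toy samples, indexed 0..9 (illustrative data, not the loan dataset).
X_toy = np.arange(10).reshape(-1, 1)

# Without shuffling, the folds are contiguous blocks of indices.
kf = KFold(n_splits=5)

test_folds = []
for i, (train_idx, test_idx) in enumerate(kf.split(X_toy), start=1):
    print(f"Iteration {i}: train={train_idx.tolist()} test={test_idx.tolist()}")
    test_folds.append(test_idx.tolist())
```

Every index shows up in exactly one test fold, which is why each sample is scored once as unseen data.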

The below diagram from Scikit-learn clearly explains the idea behind K-fold cross-validation.

There are about 15 different types of cross-validation techniques in Scikit-learn. The most commonly used method is K-fold cross-validation. Let’s see how to use K-fold cross-validation with Scikit-learn Pipeline.

K-fold cross-validation with Pipeline

Syntax:

sklearn.model_selection.KFold(n_splits=5, *, shuffle=False, random_state=None)

- n_splits — it is the number of splits; the default value is 5 i.e. 5 folds

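- shuffle — whether to shuffle the data before splitting it into folds; default is False

- random_state — when shuffle is True, controls the ordering of the indices and therefore which samples land in each fold; pass an integer for reproducible splits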
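To see what shuffle and random_state change in practice, here is a small sketch on eight made-up samples. The helper fold_test_indices is a hypothetical name introduced only for this illustration.

```python
import numpy as np
from sklearn.model_selection import KFold

# Eight toy samples (illustrative data only).
X_toy = np.arange(8).reshape(-1, 1)

def fold_test_indices(cv):
    """Return the test indices of every fold as plain lists."""
    return [test_idx.tolist() for _, test_idx in cv.split(X_toy)]

# shuffle=False (the default): folds are contiguous blocks in the original order.
ordered = fold_test_indices(KFold(n_splits=4))

# shuffle=True with a fixed random_state: samples are mixed before splitting,
# and the same seed reproduces the same folds.
shuffled_a = fold_test_indices(KFold(n_splits=4, shuffle=True, random_state=0))
shuffled_b = fold_test_indices(KFold(n_splits=4, shuffle=True, random_state=0))

print("ordered: ", ordered)
print("shuffled:", shuffled_a)
print("reproducible:", shuffled_a == shuffled_b)
```

Shuffling matters when the rows are stored in a meaningful order (for example, sorted by class or by date of entry), because contiguous folds would then not be representative of the whole dataset.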

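Using K-fold cross-validation with a Pipeline is simple if you already know how to use the Pipeline. Inside each fold, you call fit and predict on the pipe object rather than on the bare model (see the pipe.fit and pipe.predict calls in the code below), so the preprocessing steps are refit on that fold's training data only. The code uses StratifiedKFold, which takes the same parameters as KFold but keeps the class proportions of y roughly equal in every fold.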

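from sklearn.model_selection import StratifiedKFold

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

fold_num = 1

scores = []

# split() yields positional indices, so rows are selected with .iloc.

# Fold-specific names keep the earlier train/test split from being overwritten.

for train_index, test_index in skf.split(X, y):

    X_train_fold, X_test_fold = X.iloc[train_index], X.iloc[test_index]

    y_train_fold, y_test_fold = y.iloc[train_index], y.iloc[test_index]

    pipe.fit(X_train_fold, y_train_fold)

    y_pred = pipe.predict(X_test_fold)

    score = accuracy_score(y_test_fold, y_pred)

    scores.append(score)

    print(f"Accuracy for fold {fold_num}: ", score)

    fold_num += 1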

Accuracy for fold 1: 0.7886178861788617

Accuracy for fold 2: 0.7967479674796748

Accuracy for fold 3: 0.7560975609756098

Accuracy for fold 4: 0.7235772357723578

Accuracy for fold 5: 0.7704918032786885
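The manual loop above can also be written more compactly with cross_val_score, which clones and refits the estimator once per fold. The sketch below is not the article's code: so that it runs on its own, it assumes synthetic data from make_classification and a simplified pipeline (the names X_demo, y_demo and demo_pipe are ours).

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data (assumption: used only so the snippet is self-contained).
X_demo, y_demo = make_classification(n_samples=200, n_features=8, random_state=0)

# Simplified pipeline standing in for the article's preprocessor + classifier.
demo_pipe = make_pipeline(
    StandardScaler(),
    RandomForestClassifier(n_estimators=50, random_state=42),
)

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

# Returns one score per fold; the pipeline is refit from scratch on each fold.
cv_scores = cross_val_score(demo_pipe, X_demo, y_demo, cv=skf, scoring="accuracy")

print("Per-fold accuracy:", cv_scores)
print("Mean accuracy:", cv_scores.mean())
```

With the loan data, the same call would take the article's pipe, X and y in place of the demo objects.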

GridSearchCV

The process of finding the best hyperparameters for a machine learning model is called hyperparameter tuning. Hyperparameters are settings that are not learned from the data; you choose them before training, and they control the learning process of the model. Examples include alpha in Ridge and C in LogisticRegression or SVM.
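To make the distinction concrete, the sketch below fits Ridge twice on made-up regression data (an assumption for illustration; X_demo and y_demo are not from the article). The hyperparameter alpha is set by us, while the coefficients in coef_ are learned by fit and change in response to it.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Made-up regression data (illustrative only).
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(50, 3))
y_demo = X_demo @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=50)

# alpha is a hyperparameter: chosen before training, never learned from the data.
weak = Ridge(alpha=0.01).fit(X_demo, y_demo)
strong = Ridge(alpha=100.0).fit(X_demo, y_demo)

# coef_ holds the learned parameters; stronger regularization shrinks them.
weak_norm = np.linalg.norm(weak.coef_)
strong_norm = np.linalg.norm(strong.coef_)
print("Coefficient norm with alpha=0.01:", weak_norm)
print("Coefficient norm with alpha=100: ", strong_norm)
```

Tuning means trying several such values and keeping the one that scores best on held-out data.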

There are different methods for finding the best hyperparameters for your models. GridSearchCV is one of the methods available in Scikit-learn for finding the best hyperparameters. Let’s see how to use GridSearchCV with Scikit-learn Pipeline.

GridSearchCV with Pipeline

Syntax:

sklearn.model_selection.GridSearchCV(estimator, param_grid, *, scoring=None, n_jobs=None, refit=True, cv=None, verbose=0, pre_dispatch='2*n_jobs', error_score=nan, return_train_score=False)

- estimator — Scikit-learn object that implements fit() and predict(). This can also be a Pipeline object.

- param_grid — a dictionary containing the parameter names and a list of values.

- scoring — the evaluation metric used to score each candidate on the held-out fold; if None, the estimator's default score method is used

- refit — if set to True, the model will be refit with the best-found parameters. The default is True.

- cv — it is a cross-validation strategy. The default is 5-fold cross-validation.
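As a minimal sketch of how these parameters fit together, the snippet below runs a tiny grid search. It assumes synthetic data and a plain RidgeClassifier rather than the article's pipeline, purely to keep the example fast and self-contained.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import RidgeClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data (assumption: not the loan dataset).
X_demo, y_demo = make_classification(n_samples=200, n_features=8, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.25, random_state=0, stratify=y_demo
)

search = GridSearchCV(
    RidgeClassifier(),                       # estimator
    param_grid={"alpha": [0.1, 1.0, 10.0]},  # three candidates
    scoring="accuracy",                      # metric computed on each held-out fold
    cv=5,                                    # 5-fold cross-validation per candidate
    refit=True,                              # refit the best candidate on all of X_tr
)
search.fit(X_tr, y_tr)

print("Best parameters:", search.best_params_)
print("Mean CV accuracy of the best candidate:", search.best_score_)

# Because refit=True, the search object delegates score/predict
# to the refitted best estimator (also available as best_estimator_).
print("Held-out accuracy:", search.score(X_te, y_te))
```

The per-candidate details, such as the mean and standard deviation of the fold scores, are stored in search.cv_results_.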

To use GridSearchCV with a Pipeline, import it from sklearn.model_selection, then pass it the pipeline and a dictionary that maps each parameter name to the list of values to try. Because the parameters belong to a step inside the pipeline, each name must be prefixed with the step's name followed by a double underscore. In this example the estimator step is named clf, so the prefix is clf__ (for instance, clf__max_depth). The step name can be any string you like, as long as the prefix matches it. Refer to the code below.
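One way to check which prefixed names are valid is to list them with get_params. The sketch below assumes a simplified two-step pipeline (demo_pipe, with a StandardScaler standing in for the article's preprocessor) and keeps the step name clf.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Simplified stand-in for the article's pipeline; the step names are what matter.
demo_pipe = Pipeline(steps=[
    ("scaler", StandardScaler()),
    ("clf", RandomForestClassifier(random_state=42)),
])

# Each parameter of a step is exposed as <step name>__<parameter name>.
clf_params = sorted(name for name in demo_pipe.get_params() if name.startswith("clf__"))
print(clf_params)

# set_params accepts the same names, which is the mechanism GridSearchCV relies on.
demo_pipe.set_params(clf__n_estimators=200, clf__max_depth=6)
print(demo_pipe.named_steps["clf"])
```

A misspelled name, or a prefix that does not match any step, raises a ValueError when the parameters are set.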

Another important feature of GridSearchCV is that it runs K-fold cross-validation for every candidate, controlled by the cv parameter. In the code below, cv is set to 5 (i.e. 5-fold cross-validation). Since we pass 3 values for n_estimators and 4 values for max_depth, there are 12 candidate combinations, and with cv=5 the model is fit 60 (3 x 4 x 5) times, plus one final refit on all of the training data because refit defaults to True. Finally, you can use best_score_ to get the best mean cross-validated score and best_params_ to get the parameters that produced it.
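The fit count can be checked without training anything by expanding the grid with ParameterGrid. This is a small sketch using the same grid as this article; the variable names cv_folds, n_candidates and n_cv_fits are ours.

```python
from sklearn.model_selection import ParameterGrid

params = {
    "clf__n_estimators": [100, 200, 500],
    "clf__max_depth": [5, 6, 7, 8],
}
cv_folds = 5

# ParameterGrid enumerates every combination GridSearchCV would try.
candidates = list(ParameterGrid(params))
n_candidates = len(candidates)        # 3 x 4 = 12
n_cv_fits = n_candidates * cv_folds   # 12 x 5 = 60

print(n_candidates, n_cv_fits)  # prints: 12 60
```

The count grows multiplicatively with each added parameter, so it is worth estimating before launching a large search.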

from sklearn.model_selection import GridSearchCV

params={

'clf__n_estimators':[100, 200, 500],

'clf__max_depth': [5, 6, 7, 8]

}

grid_pipe = GridSearchCV(pipe,

param_grid=params,

cv=5,

verbose=1)

grid_pipe.fit(X_train, y_train)

print(grid_pipe.best_params_)

print(grid_pipe.best_score_)

Fitting 5 folds for each of 12 candidates, totalling 60 fits

{'clf__max_depth': 6, 'clf__n_estimators': 100}

0.8070294784580498

Summary

In the previous blog post here, we covered the Scikit-learn Pipeline in detail. In this post, you have seen how to implement K-fold cross-validation and GridSearchCV with a Scikit-learn Pipeline. In a future article about Pipelines, we will introduce different built-in transformers from Scikit-learn and show how to create custom transformers and how to access the output of individual transformers. Stay tuned!